I’m gradually continuing to make progress with FNIStash, gradually being the key word. It’s pretty tough to learn a new language, decode a file format, and plan a wedding at the same time.

Lately, I’ve been working on a Haskell module to decode the Torchlight 2 PAK file. The PAK file is a single archive file that contains all the configuration and asset files for the game (well, at least most of them). I’m hoping to read this PAK file and decode its contents so I can, for instance, grab the bitmaps for the various inventory items and use them in the GUI for FNIStash.

In writing the PAK module, I came tried a few different strategies for parsing the binary data, with varying levels of success. I won’t describe the PAK format here because it is already well described in the readme file included in CUE’s Torchlight II packer.

Objective

The PAK.MAN file associates a file path to a particular part of the PAK file. What I’d like to do is create a lookup table of all the files archived in the PAK file. The key would be the file path, and the value would be the right data out of the PAK file. I call this a PAKEntry. So the mapping would look something like

type PAKFiles = M.Map FilePath PAKEntry

with PAK entries being parsed with the following Get operation

getPAKEntry :: Get PAKEntry

getPAKEntry = do

hdr <- getWord32le

decSize <- getWord32le

encSize <- getWord32le

encData <- getLazyByteString $ fromIntegral encSize

return $ PAKEntry hdr decSize encSize encData

Attempt 1 – Using lazy Get

My first attempt used the lazy binary Get monad. This was also lazy on my part. My thinking here was that, since I already head a Get monad operation coded up that could parse out a PAKEntry, I could just add a line at the beginning of that operation that skips to the right part of the file. Something like

(skip . fromIntegral) (offset-4)

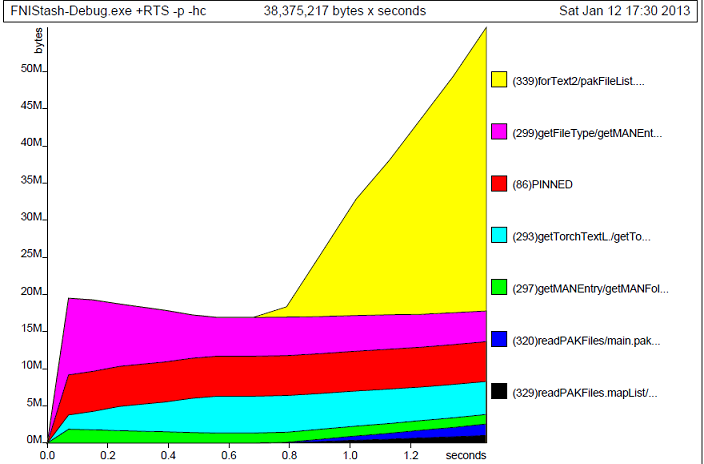

with the offset being passed in as a function argument (so the type signature would be Word32 -> Get PAKEntry). This worked, but was horrendously slow. What this does is read all the data of the PAK file into memory up to the offset (approximately), and then reads in as much more as it needs to parse out the PAK entry. For parsing entries near the end of the 800 MB file, this took a long time. I used this function to construct the table:

readPAKFiles :: FilePath -> FilePath -> IO PAKFiles

readPAKFiles manFile pakFile = do

man <- readPAKMAN manFile

pak <- BS.readFile pakFile

let fileList = pakFileList man

offsetList = pakFileOffsets man

fileOffsetList = L.zip fileList offsetList

f offset = flip runGet pak ((getPAKEntry . fromIntegral) offset)

mapList = L.zip fileList (L.map f offsetList) :: [(FilePath, PAKEntry)]

return $ M.fromList mapList

Attempt 2 – Using strict Get

Attempt 2 used the strict Get monad and strict bytestrings. The code is nearly identical to attempt 1. Here, the problem was that the BS.readFile function read in the entire contents of the PAK file to memory, then skipped to the right location of the PAK entry (instead of streaming the file into memory as necessary as it did with lazy strings). This was *much* faster, but required a huge 800 MB of memory to be read in just to parse out a tiny 26k PNG file (the file I was using for testing.).

Attempt 3 – Handle and lazy Get

Check out the type signature of the PAKFiles type. It maps a FilePath to a PAKEntry. No where in that definition is IO mentioned. So PAKFiles cannot do any IO, by definition. The previous two attempts have PAKFiles defined as a pure result of parsing binary data read through IO. What I really needed is for PAKFiles to have this type signature:

type PAKFiles = M.Map FilePath (IO PAKEntry)

Now, the result of a lookup operation is an IO action that retrieves a PAKEntry.

Reading the PAK entry lazily is the behavior I wanted to keep because I didn’t know how much data I needed to read in until after I started reading the entry. But I didn’t want to lazily read in and throw away the data in order to skip to the right part in the file. My next attempt used handles:

readPAKFiles :: FilePath -> FilePath -> IO PAKFiles

readPAKFiles manFile pakFile = do

man <- readPAKMAN manFile

let fileList = pakFileList man

offsetList = pakFileOffsets man

fileOffsetList = L.zip fileList offsetList

f offset = do

h <- openBinaryFile pakFile ReadMode

hSeek h AbsoluteSeek (fromIntegral offset - 4)

pak <- BS.hGetContents h

let parsedEntry = flip runGet pak getPAKEntry

hClose h

return parsedEntry

mapList = L.zip fileList (L.map f offsetList) :: [(FilePath, IO PAKEntry)]

return $ M.fromList mapList

There’s a problem with this, though. I kept getting errors that the handle was closed before I tried to read it!

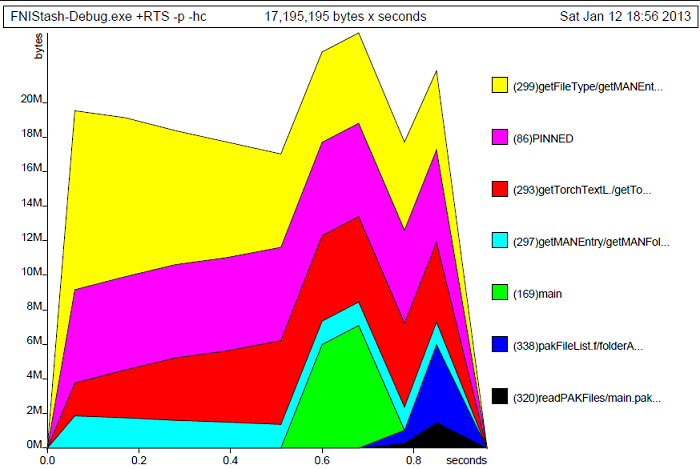

Attempt 4 – (Mostly) success with $!

The problem with attempt 3 is that runGet is executed lazily, but hOpen and hClose are not. When runGet is finally performed, the handle is already closed. This is easily fixed with the $! operator, which strict evaluates its argument:

readPAKFiles :: FilePath -> FilePath -> IO PAKFiles

readPAKFiles manFile pakFile = do

man <- readPAKMAN manFile

let fileList = pakFileList man

offsetList = pakFileOffsets man

f offset = do

withBinaryFile pakFile ReadMode (\h -> do

hSeek h AbsoluteSeek (fromIntegral offset - 4)

pak <- BS.hGetContents h

return $! (flip runGet pak getPAKEntry))

mapList = L.zip fileList (L.map f offsetList) :: [(FilePath, IO PAKEntry)]

return $ M.fromList mapList

This version of the operation is fast and uses a low amount of memory. By “low”, I mean 95 MB to parse out a 26k file. Something is wrong there, which is my next thing to investigate. Maybe that will make for another post.

– Update 01/23/2013 –

This post last ended with my creating a lookup table that generated IO actions that returned PAKEntry records. This turns out to be a horrible idea. Every time a lookup is made, IO has to be run. In order to do this, you need to pollute huge parts of your program with the IO monad. Instead, I reformulated this to be pure and more forward looking – you give the function a clue of what content you want in the table (such as a file path prefix), and it will read all of the necessary files into memory right then and there. After that, lookups can be done without the IO monad.